During my PhD and post-doc, I worked on the autonomous navigation of mobile robots and was interested in the following topics:

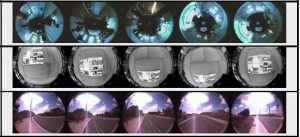

Localization within a memory of omnidirectional images

Localization consists in finding, in the visual memory, the image that best fits the current image. I proposed a hierarchical process combining global descriptors, computed on a cubic interpolation of a triangular mesh, with the correlation of patches around Harris corners. Compared with state-of-the-art techniques on three large image data sets, the proposed method offers the best compromise in terms of 1) accuracy, 2) amount of memorized data required per image and 3) computational cost.
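The coarse-to-fine idea behind this hierarchical localization can be sketched as follows. This is a minimal illustration under stated assumptions, not the original implementation: the global descriptor is represented by an arbitrary feature vector (the cubic-interpolation descriptor itself is not reproduced), patches are assumed to have already been extracted around Harris corners and flattened, and the zero-mean normalized cross-correlation (ZNCC) score, the shortlist size `k` and the `MemoryImage` structure are hypothetical choices.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class MemoryImage:
    """A key image of the visual memory (hypothetical representation)."""
    name: str
    global_descriptor: list[float]  # stand-in for the global descriptor
    patches: list[list[float]]      # flattened patches around corner points


def descriptor_distance(a: list[float], b: list[float]) -> float:
    """Euclidean distance between two global descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def zncc(p: list[float], q: list[float]) -> float:
    """Zero-mean normalized cross-correlation of two equal-size patches."""
    mp, mq = sum(p) / len(p), sum(q) / len(q)
    dp = [x - mp for x in p]
    dq = [y - mq for y in q]
    denom = math.sqrt(sum(x * x for x in dp) * sum(y * y for y in dq))
    return sum(x * y for x, y in zip(dp, dq)) / denom if denom > 0 else 0.0


def patch_score(current: list[list[float]], candidate: list[list[float]]) -> float:
    """Mean, over the current patches, of the best ZNCC found in the candidate."""
    if not current or not candidate:
        return -1.0
    best = [max(zncc(p, q) for q in candidate) for p in current]
    return sum(best) / len(best)


def localize(query: MemoryImage, memory: list[MemoryImage], k: int = 3) -> MemoryImage:
    """Hierarchical search: global shortlist, then patch-level refinement."""
    # Stage 1 (cheap): keep the k images with the closest global descriptor.
    shortlist = sorted(
        memory,
        key=lambda m: descriptor_distance(query.global_descriptor, m.global_descriptor),
    )[:k]
    # Stage 2 (costly): rank only the shortlist by local patch correlation.
    return max(shortlist, key=lambda m: patch_score(query.patches, m.patches))


# Toy example with made-up values.
memory = [
    MemoryImage("A", [0.0, 0.0], [[1, 2, 3, 4]]),
    MemoryImage("B", [1.0, 0.0], [[1, 3, 2, 4]]),
    MemoryImage("C", [5.0, 5.0], [[1, 2, 3, 4]]),
]
query = MemoryImage("query", [0.4, 0.0], [[2, 6, 4, 8]])
print(localize(query, memory, k=1).name)  # -> A (global stage only)
print(localize(query, memory, k=2).name)  # -> B (patches refine the choice)
```

The cheap global comparison prunes most of the memory, so the more expensive patch correlation only runs on `k` candidates. In the toy data, the global stage alone would select `A`, and the patch stage corrects the choice to `B`.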

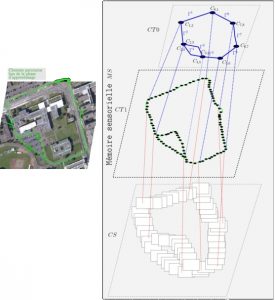

Navigation framework based on a visual memory

When navigating in an unknown environment for the first time, a natural behavior consists in memorizing some key views along the performed path, in order to use these references as checkpoints for a future navigation mission. I have proposed a navigation framework for wheeled robots based on this principle. During a human-guided learning step, the robot performs paths which are sampled and stored as a set of ordered key images, acquired by an embedded camera. The set of visual paths obtained in this way is topologically organized and provides a visual memory of the environment. The proposed navigation framework can be divided into three steps:

- visual memory building,

- initial localization: it consists in finding the image in the visual memory that best fits the current image,

- autonomous navigation: given an image of one of the visual paths as a target, the vehicle navigation mission is defined as a visual route. A navigation task then consists in autonomously executing a visual route.
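The topological organization of the visual paths, and the extraction of a visual route from the localized image to a target image, can be sketched as a graph search. The following assumptions are not stated in the original: each visual path is an ordered list of key image identifiers, paths are connected where they share a key image, edges follow the learned direction of travel, and the route with the fewest key images is preferred. The identifiers (`I0`, `J1` and so on) and the function names are hypothetical.

```python
from __future__ import annotations

from collections import deque


def build_graph(visual_paths: list[list[str]]) -> dict[str, set[str]]:
    """Link each key image to the next one along its learned path.

    Paths sharing a key image identifier become connected through it.
    """
    graph: dict[str, set[str]] = {}
    for path in visual_paths:
        for node in path:
            graph.setdefault(node, set())
        for a, b in zip(path, path[1:]):
            graph[a].add(b)
    return graph


def visual_route(graph: dict[str, set[str]], start: str, target: str) -> list[str] | None:
    """Breadth-first search for the route with the fewest key images."""
    parent: dict[str, str | None] = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            # Walk back through the parents to rebuild the route.
            route = []
            while node is not None:
                route.append(node)
                node = parent[node]
            return route[::-1]
        for nxt in sorted(graph.get(node, ())):
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None  # target not reachable in the learned direction


paths = [["I0", "I1", "I2", "I3"], ["I2", "J1", "J2"]]
graph = build_graph(paths)
print(visual_route(graph, "I1", "J2"))  # -> ['I1', 'I2', 'J1', 'J2']
print(visual_route(graph, "J1", "I0"))  # -> None
```

Because edges follow the learned direction, a route exists from `I1` to `J2` (switching paths at the shared image `I2`) but not from `J1` back to `I0`.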

Autonomous navigation of wheeled robots

The autonomous path-following step consists in automatically following this visual route using a visual servoing technique. In the proposed approaches, the control part takes into account the model of the vehicle: the vehicle is controlled by a vision-based control law adapted to its nonholonomic constraint. In a first approach, I formulated the control problem as a path-following problem in order to guide the nonholonomic vehicle along the visual route. This path-following problem is solved using chained form theory, and the resulting control guides the vehicle along the reference visual route without explicitly planning any trajectory. A second approach for visual path following has been developed with Hector Becerra of the University of Zaragoza (Spain). It is based on epipolar geometry: the control law only requires the position of the epipole computed between the current and target views along the sequence of images of the visual memory.
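The chained-form idea behind the first approach can be illustrated on a unicycle-type vehicle. This is a sketch under assumptions, not the original controller: the lateral error `y` and heading error `theta` with respect to the current segment of the visual route are assumed to be already estimated from the images, the segment is approximated by a straight line (zero curvature), and the gains `kp` and `kd` are hypothetical. With the chained-form coordinates `a1 = s`, `a2 = y`, `a3 = tan(theta)`, imposing `a2'' + kd a2' + kp a2 = 0` (derivatives with respect to the distance `s` along the line) gives `omega = -v cos^3(theta) kp y - |v cos^3(theta)| kd tan(theta)`.

```python
import math


def chained_form_omega(v, y, theta, kp=1.0, kd=2.0):
    """Angular velocity steering a unicycle onto a straight reference line.

    y: signed lateral error (m); theta: heading error (rad), |theta| < pi/2.
    The law imposes y'' + kd*y' + kp*y = 0, with derivatives taken with
    respect to the distance s along the line, so convergence is expressed
    in metres travelled. kd = 2*sqrt(kp) gives critical damping.
    """
    c3 = math.cos(theta) ** 3
    return -v * c3 * kp * y - abs(v * c3) * kd * math.tan(theta)


def simulate(y0, theta0, v=1.0, dt=0.01, duration=20.0):
    """Explicit Euler integration of the unicycle kinematics under the law."""
    x, y, theta = 0.0, y0, theta0
    for _ in range(round(duration / dt)):
        omega = chained_form_omega(v, y, theta)
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += omega * dt
    return x, y, theta


# Start 0.5 m to the left of the line with a 0.1 rad heading error.
x, y, theta = simulate(y0=0.5, theta0=0.1)
print(f"x={x:.2f} m, y={y:.2e} m, theta={theta:.2e} rad")
```

Because the error dynamics are imposed in terms of distance travelled rather than time, the lateral error decays over a given distance independently of the forward speed `v`; in this simulation the vehicle has essentially reached the line after about 20 m.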

Autonomous navigation of quadrotor vehicles

During the path-following step, the Vertical Take-off and Landing (VTOL) Unmanned Aerial Vehicle (UAV) is guided along the reference visual route without explicitly planning any trajectory, using a vision-based control law adapted to its dynamic model.

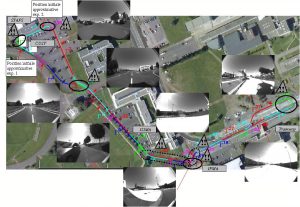

Navigation in large scale environments

Most applications of autonomous navigation using a visual memory are restricted to small-scale environments (navigation tasks along trajectories no longer than 500 meters), essentially because of inefficient memory management. We started developing the Software for Visual Navigation (SoViN) in 2008 at LASMEA with L. Lequievre. This software allows easy memory management (upload, update, removal), memory visualisation and real-time navigation. It is generic enough to allow the prototyping of different visual memory-based navigation methods. Experiments in large-scale environments (the size of a campus) validate the proposed architecture.
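The memory-management operations listed above (upload, update, removal) can be sketched as a small key-image store. This is purely illustrative: SoViN's actual interface is not described here, so the class `VisualMemoryStore`, its methods, and the idea of serving only a short window of key images ahead of the vehicle are hypothetical assumptions about one way to keep working memory bounded on long routes.

```python
class VisualMemoryStore:
    """Hypothetical key-image store supporting upload, update and removal."""

    def __init__(self):
        self._images = {}  # key image id -> image data (any type)

    def __contains__(self, image_id):
        return image_id in self._images

    def upload(self, image_id, data):
        """Add a new key image; refuse to overwrite an existing one."""
        if image_id in self._images:
            raise KeyError(f"{image_id} already stored")
        self._images[image_id] = data

    def update(self, image_id, data):
        """Replace the data of an existing key image (e.g. after re-learning)."""
        if image_id not in self._images:
            raise KeyError(image_id)
        self._images[image_id] = data

    def remove(self, image_id):
        """Delete a key image; raises KeyError if it is unknown."""
        del self._images[image_id]

    def window(self, route, index, ahead=3):
        """Key images from route[index] up to `ahead` images further on.

        Only this bounded subset is needed at a given instant of path
        following, whatever the total length of the route.
        """
        return [(i, self._images[i]) for i in route[index:index + ahead + 1]]


store = VisualMemoryStore()
route = [f"K{n}" for n in range(10)]
for n, image_id in enumerate(route):
    store.upload(image_id, f"image data {n}")
store.update("K3", "re-acquired image")
print(store.window(route, index=2, ahead=2))
```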